PySpark Documentation 2.3

Jul 12, 2017 · Fill a column in a PySpark DataFrame by comparing the data between two different columns in the same DataFrame. PySpark: how to create a column based on row values.

Sep 22, 2015 · Right now, I have to use df.count > 0 to check if the DataFrame is empty or not. But it is kind of inefficient. Is there any better way to do that? PS: I want to check if it's empty so …
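One commonly suggested alternative to counting every row is to fetch at most one row and check whether anything came back. The following is a minimal sketch under that assumption; the helper name `is_empty` is hypothetical and not part of PySpark.

```python
def is_empty(df):
    """Return True if the DataFrame has no rows.

    df.head(1) returns a list holding at most one Row, so Spark only
    needs to find a single row rather than count all of them.
    """
    return len(df.head(1)) == 0
```

Calling `is_empty(df)` avoids the full scan that a count may trigger. Newer PySpark releases (3.3 and later, to my knowledge) also ship a built-in `DataFrame.isEmpty()`, which is not available in 2.3.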

from pyspark.sql import functions as F # This one won't work for directly passing to from_json as it ignores top-level arrays in json strings (if any)! # json_object_schema = …

Jun 28, 2018 · As suggested by pault, the data field is a string field. Since the keys are the same (i.e. key1, key2) in the JSON string over rows, you might also use json_tuple; this function …
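The `json_tuple` suggestion above might look roughly like the sketch below. It assumes a DataFrame with a string column of JSON objects that share the same keys; the helper name `extract_json_keys` and its parameters are hypothetical, chosen only for illustration.

```python
def extract_json_keys(df, json_col, keys):
    """Add one string column per key, read from a JSON string column."""
    # Imported lazily so the helper can be defined without Spark installed.
    from pyspark.sql import functions as F

    # json_tuple produces one output column per requested key; passing
    # several names to alias() labels those columns in order.
    return df.select("*", F.json_tuple(F.col(json_col), *keys).alias(*keys))
```

With the column and keys named in the snippet, a call would be `extract_json_keys(df, "data", ["key1", "key2"])`. The extracted values come back as strings, and a key missing from a row yields null, so cast afterwards if other types are needed.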

Gallery for Pyspark Documentation 2.3

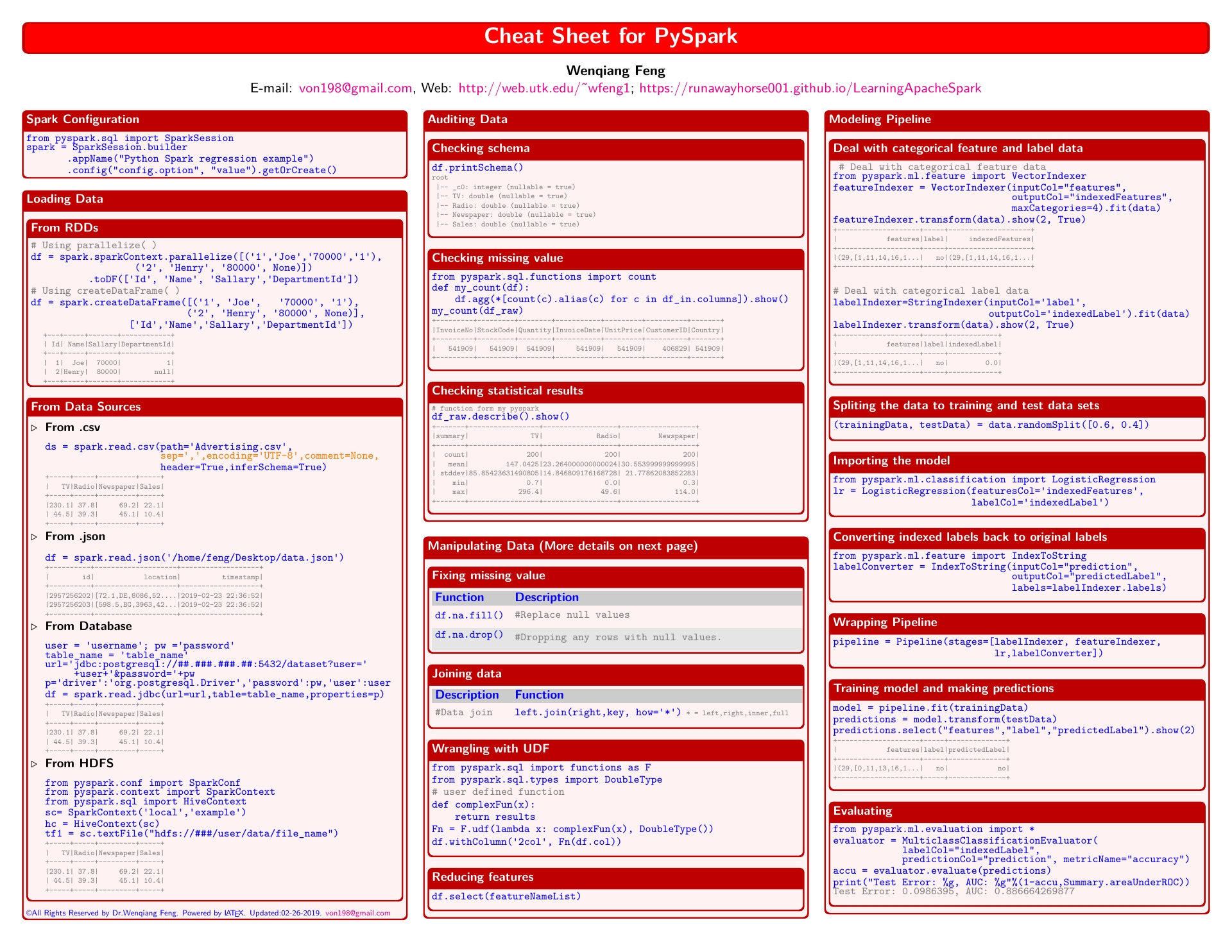

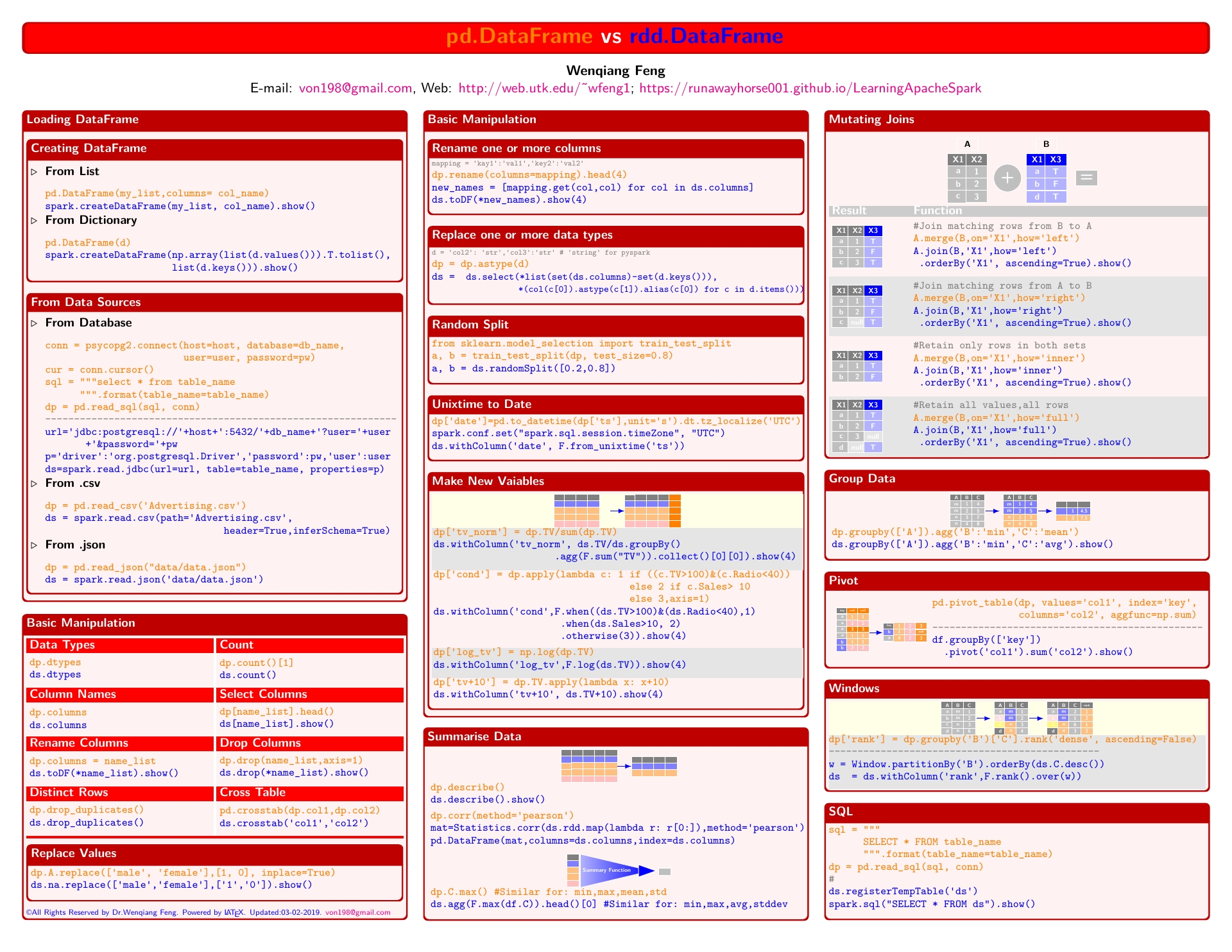

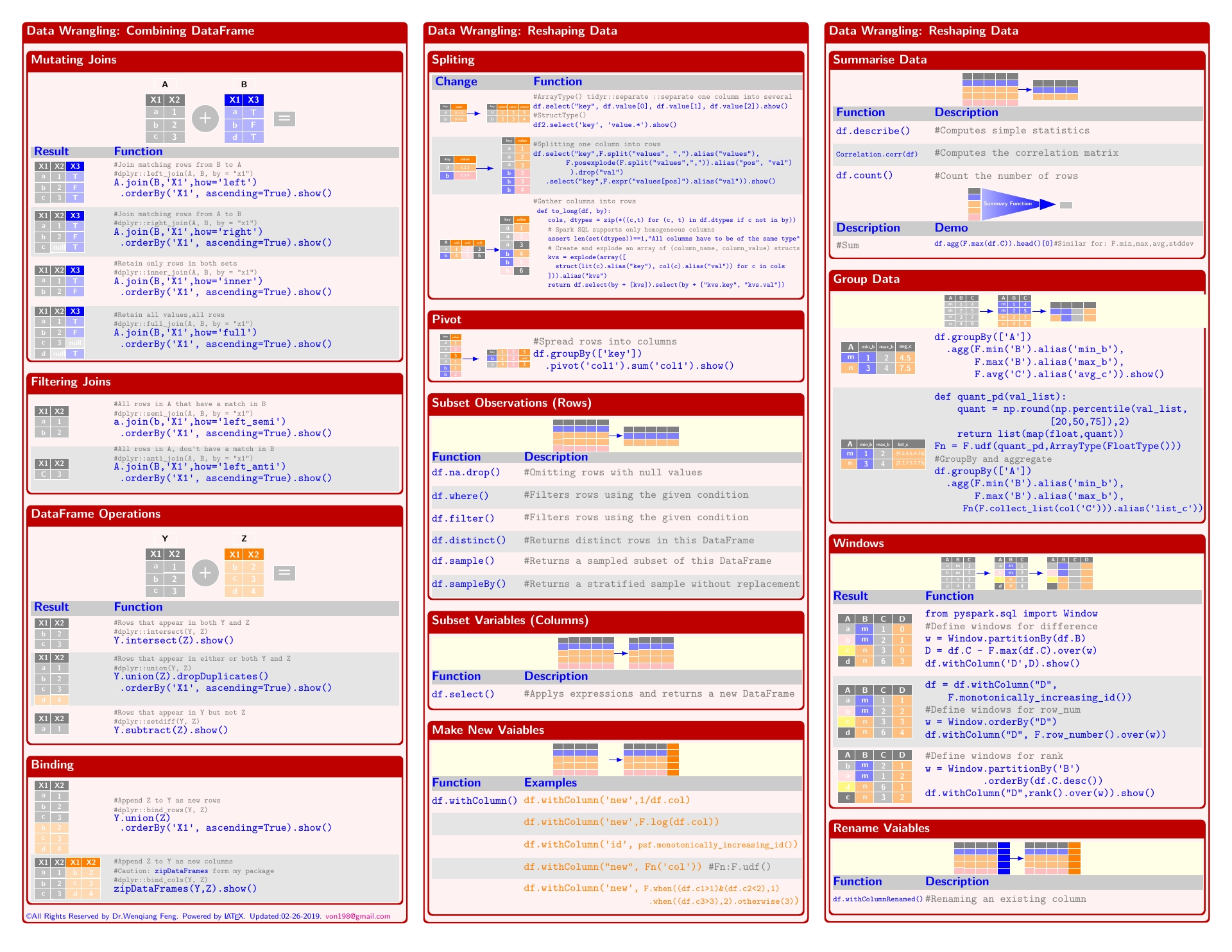

24 My Cheat Sheet Learning Apache Spark With Python Documentation

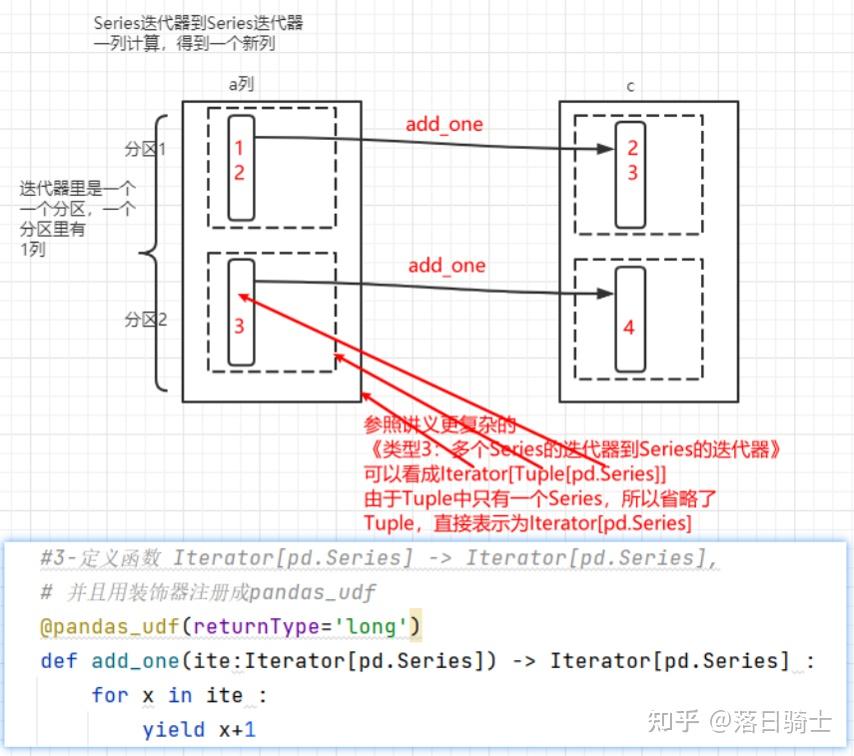

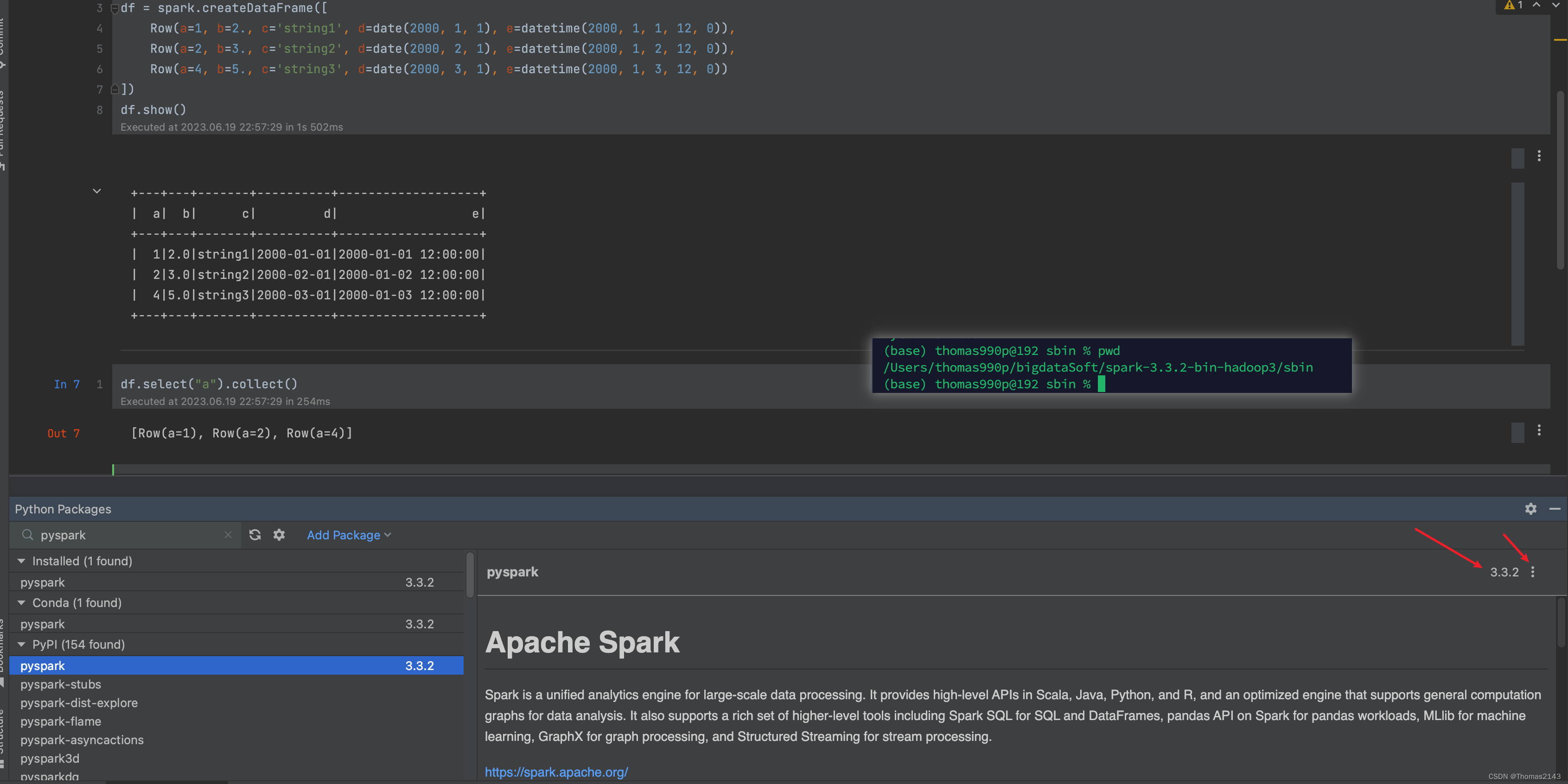

PySpark Pandas udf 4

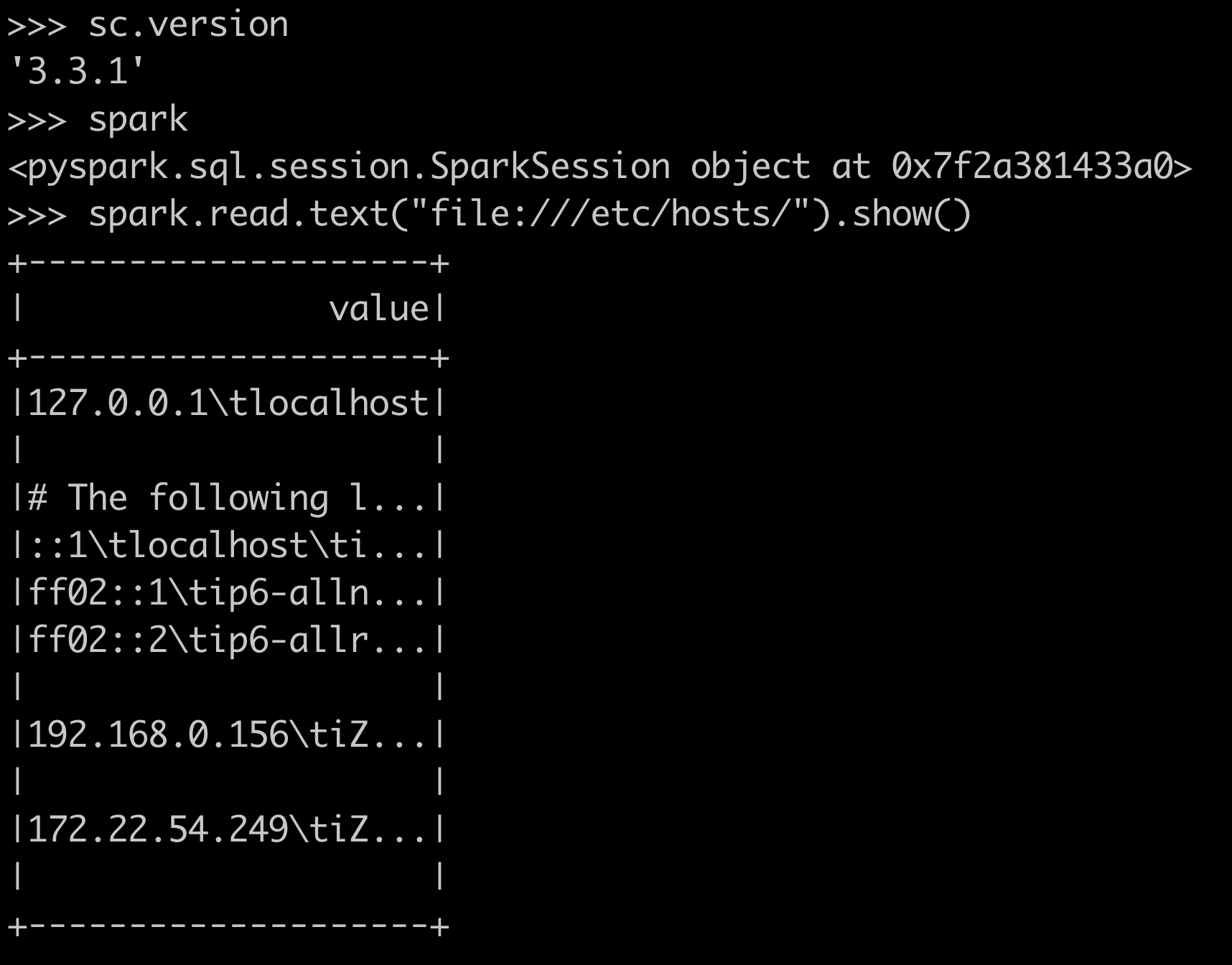

2023 Spark PySpark

PySpark Transformation Action

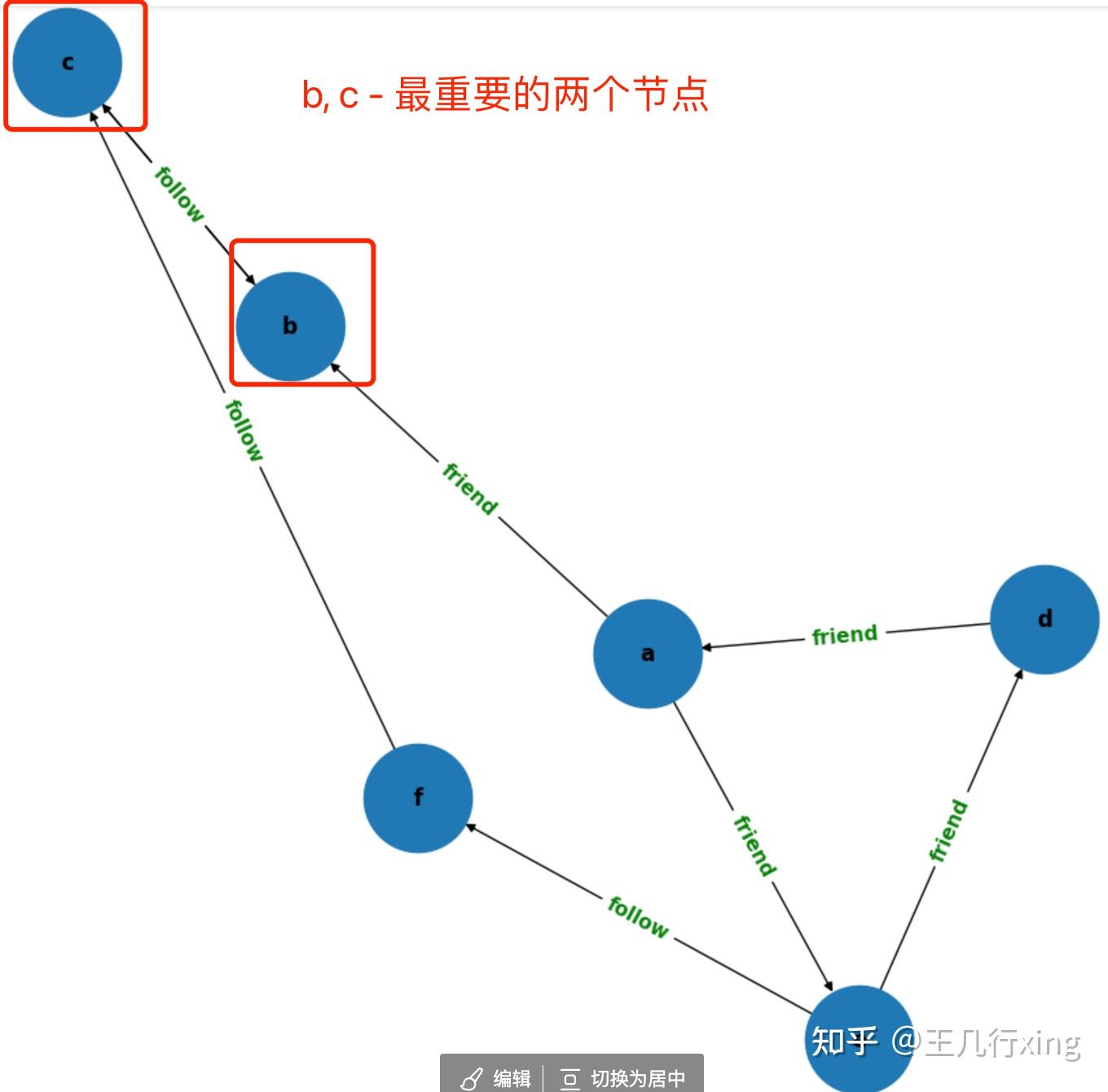

PySpark GraphFrames Databricks

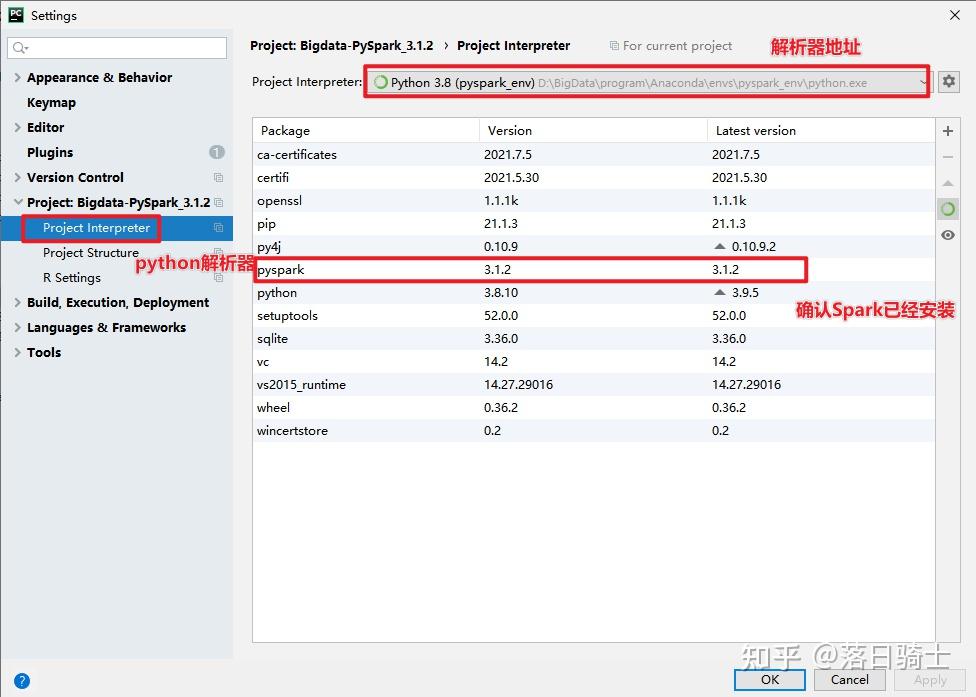

Python Spark

pySpark String integer

Pyspark Py4JError An Error Occurred While Calling O44